Maximising the value of work not done

Measuring the impact of strategic rejection

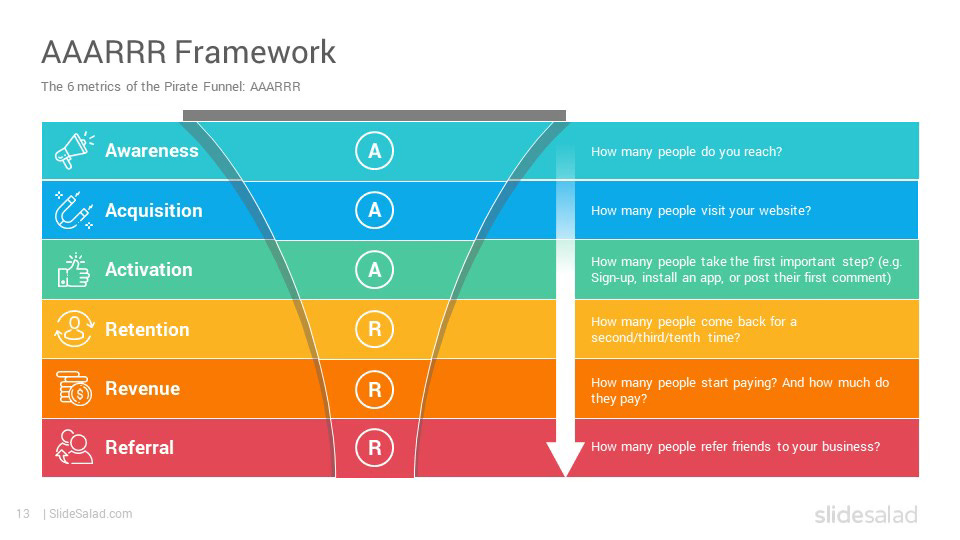

I once consulted with a team on their newly launched SaaS product and introduced them to Dave McClure’s pirate metrics to measure their user funnel. The version I use breaks the user lifecycle into six stages:

Awareness - getting people to know you exist

Acquisition - getting them to visit your site

Activation - converting them into users

Retention - ensuring they come back in sufficient numbers

Revenue - monetising their activities

Referral - having them act as ambassadors and references for the product
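To make the funnel concrete, here is a minimal sketch of how stage-to-stage conversion could be calculated. The counts are invented for illustration and are not the team's data. Treating the stages as a strictly linear sequence is a simplifying assumption, since referral and revenue don't always follow one another in practice.

```python
# Hypothetical stage counts for a single cohort (illustrative numbers only).
funnel = [
    ("Awareness", 10000),
    ("Acquisition", 4000),
    ("Activation", 800),
    ("Retention", 480),
    ("Revenue", 240),
    ("Referral", 96),
]


def stage_conversion(stages):
    """Return (from_stage, to_stage, rate) for each adjacent pair of stages."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(stages, stages[1:]):
        rate = count / prev_count if prev_count else 0.0
        rates.append((prev_name, name, rate))
    return rates


rates = stage_conversion(funnel)
for prev_name, name, rate in rates:
    print(f"{prev_name} -> {name}: {rate:.0%}")

# The weakest transition is a candidate for where to investigate first.
weakest = min(rates, key=lambda r: r[2])
```

With these made-up numbers, the weakest transition is Acquisition to Activation. A low conversion rate only tells you where to look, not why users are leaving.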

The team was seeing growth across the funnel, but they felt their retention numbers could be better. In-product surveys also gave them feedback about the need for more advanced analytics within the product. They dug into their retention numbers to verify whether the lack of analytics was driving the drop-off, and found that abandonment was highest among users who hadn’t progressed far enough through the funnel to see any of the analytics in the product. What was going on?

They interviewed the users looking for investment in analytics and researched the concerns of the cohort of early abandoners. They found that there were two distinct issues in the funnel:

Some users loved the product but couldn’t do the kind of analysis they wanted on the data they were finding. There was no export functionality allowing them to easily push data to an external tool, so they effectively felt stuck.

A large cohort of people signed up for the product, got confused about the value proposition, and abandoned it before getting any value.

The team had limited resources, so couldn’t tackle both issues simultaneously. One was going to have to take priority. There were compelling arguments for both, and they debated long and hard. They repeatedly reached a stalemate when discussing which was the better bet. They couldn’t agree how to choose. This is where many teams get stuck, or worse, try to do it all.

Reframing the question

One day, trying to break the logjam, I asked “How do you maximise the value of work not done?”

In other words, which of these issues could they afford to put on hold while solving the other? Taking this perspective, it became obvious that while they ran the risk of losing some users by not upgrading their analytics, they would struggle to survive, let alone grow the product, if they didn’t fix their activation flow and make the value proposition easier to understand.

It was clear that they needed to resolve the confusing messages in their activation flow. I made the point that it didn’t make any difference how beautiful their cooking was if they couldn’t get their dinner guests through the front door.

They realised that they could quickly build a simple export function, so that users who wanted deeper analysis could take their data to an external tool. At the same time, they would rebuild the activation part of the funnel and delay any further investment in analytics within the application.

This wasn’t without risk. It meant a fundamental change to the way the team usually delivered. Instead of releasing small increments quickly, they would be ‘going dark’ on product features for a reasonable amount of time, and then relaunching the product.

Measuring the power of ‘no’

The attrition rate of users who had invested time in the product wasn’t the biggest problem they faced; it was actually quite low. The abandonment rate of new, expensively acquired users was a significant drag. They hypothesised that they could double the number of users who would complete the activation flow. This would have the happy result that advanced analytics, once built, would serve the needs of a much larger number of users. Eventually, they could have the best of both worlds.

Their strategic ‘no’ was in fact building toward a larger strategic ‘yes.’ They were maximising the value of work not done. The challenge for them was to demonstrate that they had made the right decision.

So, how should these choices be measured? What’s the best way to measure the value created by a strategic ‘no’?

There are four dimensions that should be considered:

Learning efficiency

Customer outcomes

System health

Team effectiveness

Together, these give a rounded picture of the impact of the decisions you make. Learning efficiency enables you to validate quickly whether you’re on the right path. Customer outcomes demonstrate whether you chose the right problem and whether your solution is having the right impact. System health demonstrates that you built it sustainably. Team effectiveness confirms that you have the right environment for continued success.

Learning efficiency

Learning efficiency is about your time to qualify. How quickly can you tell whether you’ve made the right call? What are the early indicators you can use to suggest that you’re on the right path?

In the SaaS business, the team had user research and historic retention rates to guide their choice. They instrumented the activation flow so that they could see exactly where confusion arose. Interviews with abandoners underlined the problems that required attention. They tested different flows before building anything, so that the most promising solutions would guide their development.
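As a rough illustration of what that instrumentation makes possible, the sketch below takes a log of activation events and counts where users stopped. The step names, user IDs, and events are all hypothetical. The article's team may have used entirely different tooling.

```python
from collections import Counter

# Hypothetical ordered steps in an activation flow (names are illustrative).
STEPS = ["signed_up", "connected_data", "created_first_report", "activated"]

# Hypothetical event log: (user_id, step) pairs emitted by the instrumentation.
events = [
    ("u1", "signed_up"), ("u1", "connected_data"),
    ("u1", "created_first_report"), ("u1", "activated"),
    ("u2", "signed_up"),
    ("u3", "signed_up"), ("u3", "connected_data"),
    ("u4", "signed_up"),
    ("u5", "signed_up"), ("u5", "connected_data"),
    ("u6", "signed_up"),
]


def furthest_step(events, steps):
    """Map each user to the furthest step they reached in the flow."""
    order = {step: i for i, step in enumerate(steps)}
    furthest = {}
    for user, step in events:
        if step in order and order[step] > furthest.get(user, -1):
            furthest[user] = order[step]
    return {user: steps[i] for user, i in furthest.items()}


def abandonment_by_step(events, steps):
    """Count users whose journey ended at each step before the final one."""
    final = steps[-1]
    reached = furthest_step(events, steps)
    return Counter(step for step in reached.values() if step != final)


drop_offs = abandonment_by_step(events, STEPS)
for step, count in drop_offs.most_common():
    print(f"{count} users stopped at {step}")
```

The step with the most abandoners is where to point your interviews. The numbers show where people leave, and the conversations explain why.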

Customer outcomes

Customer satisfaction rates give good insight into the quality of your choices. Strong customer satisfaction or Net Promoter Scores indicate that you’re solving problems your customers and users care about.

Removing friction from the AARRR funnel is key. Many customers won’t bother filling in customer satisfaction surveys if they’re annoyed. They’ll simply stop using your service. A combination of pirate metrics (or similar) and customer satisfaction will give you a strong sense of how customers view your choices.

It’s important to measure the impact against your original hypothesis. Were your forecasts accurate? Can you demonstrate or appraise the impact of your changes on your company’s bottom line?

In the SaaS business, the team had forecast that their retention rates would double. In fact, they tripled while customer satisfaction scores stayed stable. These metrics gave the team confidence they had made the right choice.
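A comparison like this can be written down before the work starts, so that nobody argues about the goalposts afterwards. The sketch below is one way to do it, assuming a single headline rate, a forecast expressed as a multiple, and a guardrail metric such as a satisfaction score. Every number in it is invented, and the 5% tolerance for "stable" is an arbitrary assumption.

```python
def evaluate_bet(baseline, observed, forecast_multiple,
                 guardrail_before, guardrail_after, tolerance=0.05):
    """Compare an observed change against the forecast and a guardrail metric.

    A multiple of 2.0 means the headline rate doubled. The guardrail counts
    as stable if it moved by no more than `tolerance` (as a fraction).
    """
    if baseline <= 0 or guardrail_before <= 0:
        raise ValueError("baseline values must be positive")
    actual_multiple = observed / baseline
    guardrail_shift = abs(guardrail_after - guardrail_before) / guardrail_before
    return {
        "actual_multiple": actual_multiple,
        "met_forecast": actual_multiple >= forecast_multiple,
        "guardrail_stable": guardrail_shift <= tolerance,
    }


# Hypothetical figures: a rate of 12% before, 36% after, forecast to double,
# with a satisfaction score that did not move.
result = evaluate_bet(
    baseline=12.0,
    observed=36.0,
    forecast_multiple=2.0,
    guardrail_before=4.2,
    guardrail_after=4.2,
)
print(result)
```

The guardrail matters as much as the headline number. A rate that triples while satisfaction collapses is not the same result.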

System health

Saying no to ideas that don’t drive you forward reduces technical debt and the cognitive load of the team in managing the application. Focusing on what matters will lead to an increase in quality.

In the SaaS business, ‘going dark’ on new product features gave the team the ability to focus on their most important problem, delivering an enhanced technical solution as well as solving the customer-facing issue. This should be an aspect of every choice you have to make: how can we reduce the technical burden of the system?

Team effectiveness

Narrowing a team’s focus through strategic choices helps it operate more effectively, because there are fewer context switches. Keeping the team focused on a single goal builds confidence and should reduce the noise coming from outside the team. That in turn means fewer wasted features built without evidence or a driving hypothesis.

By ruthlessly prioritising, you can ensure that the team isn’t distracted by other feature requests. Like the team in the example, you can keep researching and preparing for the next big initiative while the engineers stay focused on the promised work. The team did this by ensuring there was absolute clarity that the activation work would be completed before any further engineering effort went into analytics.

The broader lesson

Organisations need to accept the value of strategic rejection. The belief that “you can’t prove a negative” robs you of the ability to say no. No customer requests will ever be refused. No stakeholder ideas will ever be challenged, however much evidence there is that they’re not meeting customer needs. Worse, there is no room for any failure. Every launch, every new feature, must succeed. Psychological safety withers. Experimentation never happens. Research is ignored. The rise of the feature factory is inevitable.

This manifests itself in insidious ways in larger organisations. The disciplined Product Manager who says ‘no’ to ideas that aren’t even half-baked gets passed over for promotion in favour of the one who lets their product bloat with barely used features. PMs who look for data and evidence to power their hypotheses are criticised for being slow, while other teams quickly build a Frankenstein’s monster, piling one release on top of another with no coherent strategy.

What can we do to fight back when everything becomes urgent, and everything must succeed? How do we demonstrate the value of disciplined prioritisation? As the B2B SaaS team did, you need to demonstrate the positive contribution that your choice made to the organisation. Using the above metrics makes it clear whether your bets paid off.

In the SaaS business, the team had to deal with concerns from customers as they waited for their in-app analytics to improve. By giving them a data export function and continuing conversations, they were able to iterate quickly on different solutions for different market segments. The wait was worthwhile because they built something better. They made sure they had guests moving through the front door, then served them a feast. Strategic rejection didn’t slow them down. It gave them time to cook something worth serving.